Stop fearing AI for outbound sales: Master what to automate and what to keep human

The biggest mistake in outbound sales right now is using AI for automation without a control layer.

Picture this: your sales inbox suddenly blows up overnight. You rush to check, thinking, “Finally! A campaign that worked!” But as you scroll, it’s all robotic, irrelevant AI spam.

Your excitement is instantly replaced by the grim reality of irritated prospects.

This is the reality for many sales teams today who dove headfirst into using AI and Large Language Models (LLMs) alone for LinkedIn outbound and other multi-channel outreach, assuming unchecked speed would mean more sales.

LLMs are great for improving your team's efficiency. However, using an LLM alone means there is no workflow integration, no approval checkpoint, and no reliable audit trail for when things go wrong. Without an approval step where judgment and context live, AI can become reckless.

The fix isn’t using less AI; it’s adopting better, safer sales orchestration.

In this guide, I'll show you exactly where to pull the brakes, where to accelerate, and six real workflows you can launch this week to build a safer, high-efficiency, AI-powered outbound system.

The secret to making AI work for outbound sales

Most of the AI outbound failures I’ve seen trace back to a breakdown in the sales process, largely caused by blind automation.

AI is a powerful engine, but if you bolt it directly onto your outbound system without a steering wheel and brakes, you crash.

Right now, the market is flooded with “AI SDRs” that promise a fully automated, zero-human outbound experience. On paper, it sounds efficient. In practice, it maximizes speed at the cost of reputation, context, and brand integrity.

I’m talking about moments like these:

- The context black hole: AI drafts a message based on one enriched data point, say, a new funding round, but misses that the prospect just changed jobs. Without a human review step in place to catch this, the message lands tone-deaf and irrelevant.

- Burned domains and pacing chaos: Without oversight, AI-powered automation tools fire off messages too fast, leading to high bounce rates, provider flags, and deliverability issues.

- Misclassified leads: A human would know that “I’m out till next week” means “follow up later.” AI might mark it as “Not Interested,” letting hot leads slip through.

- No approval checkpoint: LLMs can generate high-pressure variants that violate tone or ethics, and without review, they go out anyway.

- Zero audit trail: When something breaks, RevOps can’t trace why, because the messages were sent outside the main platform.

These aren’t necessarily flaws in AI itself; they are largely caused by gaps in orchestration.

5 ways unsupervised AI breaks sales outreach and how to fix it

AI works beautifully for ideation, single prompts, and quick experiments.

But when you scale outbound to hundreds of leads, synced CRMs, and multiple reps, without supervision, context collapses and the cracks start to show.

Let’s break down five common failure zones and how to fix them:

1. Tone drift → corporate, lifeless messaging

Failure zone: When running unsupervised on a list, LLMs tend to default to a polite but generic, corporate, or overly aggressive tone. One email might sound casual, while the next reads like a legal disclaimer.

Safeguard: Instead of letting AI generate messages unsupervised, instruct it to run a tone check on its own output so that it strips out jargon and matches your desired tone before the message is queued for outreach. This keeps the personalization process automated, yet strictly governed by your brand's style.

2. Context blindness → hot leads mis-tagged

Failure zone: AI tools have no operational memory. They can’t remember who replied, what stage they’re in, or that “James from Acme” already converted. They might draft a new outreach about a funding round, unaware that your rep spoke to that team yesterday.

Safeguard: HeyReach MCP solves this by connecting your AI to the full, real-time context of your pipeline, which gives it operational memory. You can instruct the AI to check the current lead status across your sales stack before it takes any action. For example, before drafting a message, the AI must first ask: "Is this lead already tagged as 'Replied Positively' in HeyReach's Unibox?" or "Did the CRM show a recent 'Meeting Booked' status for this person?" If the check is positive, the MCP workflow stops or redirects the lead to a thank-you note instead of an outreach pitch.
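As a rough illustration, the pre-draft check could look like the sketch below. It assumes the lead's status has already been fetched into a plain record; the `LeadStatus` fields, the `next_action` function, and the returned action names are hypothetical and are not part of HeyReach's or any CRM's API.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical values; the real tags and CRM stages depend on your setup.
STOP_TAGS = {"Replied Positively"}
STOP_CRM_STATUSES = {"Meeting Booked"}


@dataclass
class LeadStatus:
    name: str
    heyreach_tag: Optional[str] = None  # tag as seen in the Unibox, if any
    crm_status: Optional[str] = None    # latest CRM status, if any


def next_action(lead: LeadStatus) -> str:
    """Decide what the agent may do before any message is drafted."""
    if lead.heyreach_tag in STOP_TAGS or lead.crm_status in STOP_CRM_STATUSES:
        # Already engaged: never send a cold pitch to this lead.
        return "send_thank_you"
    return "draft_outreach"
```

The point of the design is ordering: the status lookup runs first, and drafting only happens when nothing in the pipeline says the lead is already engaged.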

3. Dirty data risk → cringeworthy personalization

Failure zone: LLMs rely on the quality of your lead or prospect data to generate relevant output. When they process inaccurate inputs like the wrong company size or title, they hallucinate and produce cringeworthy personalization that erodes trust.

Safeguard: Run LLM-powered data cleaning and validation before any message drafting begins. Set up a workflow where, for every new lead, the MCP-connected AI agent first runs a data verification step. For instance, before writing a message, the AI must use a tool like Clay (via MCP) to cross-check the company size, title, and funding round in real time. If the data is missing or flagged as low-confidence, the AI agent is instructed to either enrich the data or simply skip the hyper-personalization step and fall back on a safe, relevant message.
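The decision at the end of that verification step can be sketched as a small function. This is only an illustration under assumptions: the field names, the `confidence` score, the `enrichment_attempted` flag, and the 0.8 threshold are all hypothetical, and the actual verification would come from your enrichment tool.

```python
REQUIRED_FIELDS = ("company_size", "title", "funding_round")
MIN_CONFIDENCE = 0.8  # assumed threshold; tune it for your data source


def personalization_mode(lead: dict) -> str:
    """Return 'personalize', 'enrich', or 'fallback' for one lead record."""
    missing = [field for field in REQUIRED_FIELDS if not lead.get(field)]
    if missing:
        # Try enrichment once; if that was already attempted, stay generic.
        return "fallback" if lead.get("enrichment_attempted") else "enrich"
    if lead.get("confidence", 0.0) < MIN_CONFIDENCE:
        # Data is present but not trusted enough for hyper-personalization.
        return "fallback"
    return "personalize"
```

Only leads that come back as `personalize` would receive a hyper-personalized draft; everything else gets enriched first or receives the safe, generic message.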

4. Over-automation → pacing breaks, accounts flagged

Failure zone: Without guardrails, AI-driven automation runs wild, blasting hundreds of messages in an hour, tripping spam filters, and burning domains. The system lacks pacing logic, sending limits, or awareness of platform thresholds.

Safeguard: HeyReach MCP acts as a coordination layer between AI output and execution, enforcing human-safe, platform-aware pacing in your AI-driven campaigns and giving the AI a set of non-negotiable speed limits. The AI can be told: "Do not exceed the daily connection request limit set in HeyReach, and prioritize leads from the 'High Intent' list first, pausing all other campaigns if the risk threshold is reached."
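To make the pacing rule concrete, here is a minimal sketch of the logic behind that instruction. It assumes you already know how many requests were sent today and what the daily limit is; the `plan_sends` function and its parameters are hypothetical, and in practice the limit itself is enforced by the outreach platform, not by this code.

```python
def plan_sends(high_intent, others, sent_today, daily_limit):
    """Return the leads that may be contacted today, high-intent first."""
    remaining = max(daily_limit - sent_today, 0)
    # High-intent leads fill the remaining slots before anyone else.
    queue = list(high_intent) + list(others)
    return queue[:remaining]
```

For example, with a daily limit of 20 and 18 requests already sent, only the first two leads in the high-intent list would be scheduled, and everyone else waits until tomorrow.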

5. Compliance blind spots → no audit, no trace

Failure zone: Disconnected AI tools operate in silos. When Claude drafts outreach outside your workflow, there’s no visibility into what was written, edited, or approved. That means compliance teams can’t verify message sources, ensure review, or trace risky content, creating blind spots in regulated outreach environments.

Safeguard: Use an MCP server to connect your AI agent with HeyReach and the rest of your sales stack, so you can operate them from one centralized place. This way, every prompt and piece of information is transparently routed and logged within your core workflow for full auditability, removing blind spots in your outreach.
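If you want to picture what a logged action might contain, the sketch below builds one audit record as a JSON line. The record shape and the `audit_line` function are assumptions made for illustration; they do not describe how HeyReach or any MCP server stores its logs.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def audit_line(action: str, lead_id: str, prompt: str, output: str,
               approved_by: Optional[str] = None) -> str:
    """Build one JSON Lines record describing a single AI action."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "action": action,
        "lead_id": lead_id,
        "prompt": prompt,
        "output": output,
        "approved_by": approved_by,  # stays None until a human signs off
    }
    return json.dumps(record)
```

Appending one such line per action to a shared log gives compliance a simple way to see what was written, for whom, and who approved it.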

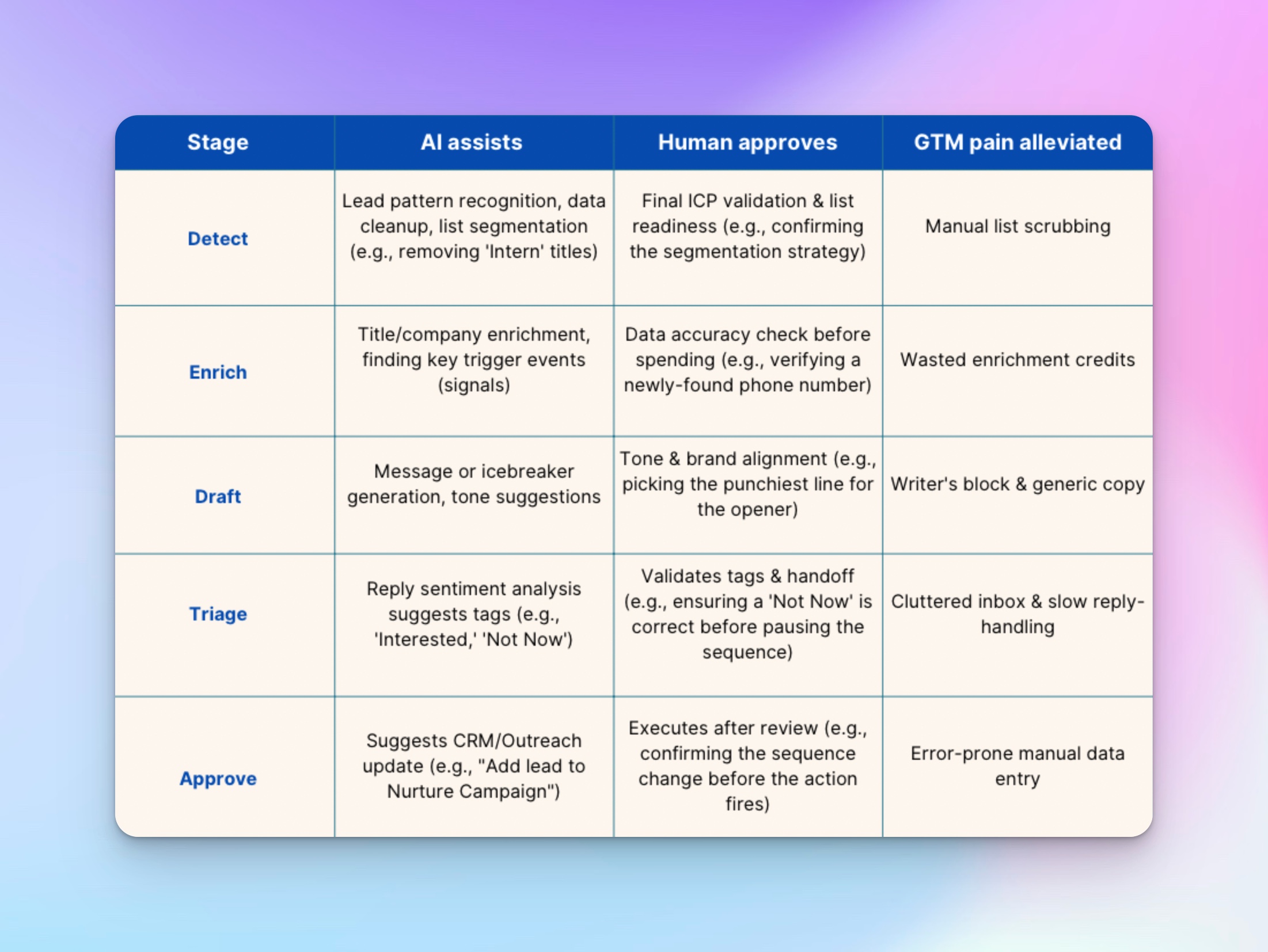

Don’t take the "I" Out of AI: AI vs. Human role map

To achieve maximum efficiency and ease with AI-powered outbound, you need a clear division of labor where the AI acts as a multiplier for your human sales team. The goal is to offload high-volume, repetitive tasks to AI while keeping human oversight on all strategic decisions.

That’s what “AI with guardrails” really means: every task has a clear owner.

For a high-performing Go-To-Market (GTM) team, the collaboration between AI and human control is clearly defined across key stages:

The human-in-the-loop is non-negotiable

This workflow ensures nothing is automatic without a strategic human checkpoint. While AI generates high-quality output at scale, a human retains control over every critical decision:

- AI cleans lists → you approve ICP: AI identifies prospects who match the pattern; you approve the final list filter before hitting launch.

- AI drafts copy → you pick tone: AI generates 10 high-quality variations; you select the one that best matches your brand voice and campaign goal.

- AI triages replies → you validate tags: AI reads 100 replies and tags them by sentiment; you review and validate the 'Interested' tags before jumping in for a conversation.

- AI flags signals → you prioritize: AI notifies you about a key prospect who just posted about a competitor; you prioritize this lead over a colder one.

Crucially, foundational safety layers like pacing, seat rotation, and daily send limits remain strictly enforced, even with AI assistance, to protect your accounts and deliverability. You stay in control.

Real-world use case for MCP: High-velocity lead hygiene and validation

This example illustrates how your GTM team can use the Model Context Protocol (MCP) to enforce data quality and human control at scale:

The scenario: A sales team needs to launch a high-volume campaign across 15 sequences and 8 outbound seats. Their success depends on the cleanliness of the 10,000-lead list and accurate ICP alignment.

The MCP workflow

- AI assists (lead hygiene): The integrated Claude + HeyReach MCP setup processes the 10,000-lead list in under an hour. It automatically flags duplicates, cleans dirty data, and enriches missing job titles.

- AI flags (signal detection): The AI identifies and flags leads that don't match the current ideal customer profile (ICP) pattern, for example, classifying them as 'out of scope' or 'too junior'.

- Human approves (contextual review): The sales development representative (SDR) or BDR spends 15 minutes reviewing the AI's flagged list in HeyReach, approves the removal of duplicates, and verifies the AI's classification of non-ICP leads. This prevents misclassified leads from being messaged.

- Safe execution: Only the clean, validated, and human-approved list is passed to the sequencing engine.

And just like that, you've set up a workflow that cuts out hours of manual list scrubbing and enrichment, ensuring only high-quality data flows into your campaigns, achieving speed without sloppiness.
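The flag-then-approve split in the workflow above can be sketched in a few lines. This is a simplified illustration, assuming each lead is a dictionary with hypothetical `linkedin_url` and `title` fields and that ICP matching is a plain keyword check; a real setup would rely on the AI's classification rather than substring matching.

```python
def triage_list(leads, icp_title_keywords):
    """Split leads into a clean list and a flagged list for human review."""
    seen = set()
    clean, flagged = [], []
    for lead in leads:
        # Normalize the profile URL so trivial variations count as duplicates.
        key = lead["linkedin_url"].strip().lower().rstrip("/")
        if key in seen:
            flagged.append((lead, "duplicate"))
            continue
        seen.add(key)
        title = lead.get("title", "").lower()
        if any(keyword in title for keyword in icp_title_keywords):
            clean.append(lead)
        else:
            flagged.append((lead, "out of scope"))
    return clean, flagged
```

Nothing in the flagged list is deleted automatically. It is the queue the SDR reviews before the clean list moves on to sequencing.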

6 AI-sales outbound workflows you can implement this week

You don’t need to rebuild your stack to start using AI safely.

I have written 6 workflows that demonstrate how you can safely and effectively combine an LLM like Claude via MCP with your outreach platform (HeyReach) and CRM.

All of these workflows will show you how to pair AI as a force multiplier with a human review step to maintain safety and strategic control.

Before you hand anything off to AI, structure your prompts with the RTO framework, a simple guardrail shared by Brandon Charleston, founder of the growth marketing agency Top of Funnel, for turning messy instructions into predictable, reusable systems.

Good prompts lead to predictable AI results, and predictable AI results are the foundation of safe automation. RTO stands for role, task, output, and it is an effective way to structure your commands for LLMs.

This simple pattern eliminates vague instructions and defines guardrails for the AI, ensuring consistency and safety across all your HeyReach workflows.

RTO Prompt blueprint explained

The RTO framework ensures your AI agent knows who it is, what it needs to do, and how to format the results.

- Role: This defines the AI's identity and constraints, acting as your primary safety control. Example: LinkedIn Outbound Strategist targeting B2B SaaS Founders. Always use a conversational and direct tone.

- Task: This specifies the exact action to be taken, often connecting the AI to your tools via the Model Context Protocol (MCP). Example: Analyze a lead's profile for a recent post or funding news. Then, draft 3 unique, personalized icebreakers.

- Output: This dictates the required format of the response, making the AI's result instantly usable in your next tool, like a HeyReach custom field or CRM update. Example: JSON format, with fields: Opener_1, Opener_2, Opener_3. Each message must be under 50 words.

Failure zone: A vague prompt like "Find good leads and write a message" leads to vague output.

Safeguard: Have your team use the RTO template before every run to ensure clarity and solid outputs.
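One way to make the template hard to skip is to encode it as a small structure that refuses to render when a part is missing. The sketch below is an assumption about how a team might do this; the `RTOPrompt` class and its plain-text layout are hypothetical, not a format required by Claude or HeyReach.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RTOPrompt:
    role: str    # who the AI is, plus tone constraints
    task: str    # the exact action to take
    output: str  # the required response format

    def render(self) -> str:
        """Return the prompt text, refusing to render if a part is empty."""
        for name in ("role", "task", "output"):
            if not getattr(self, name).strip():
                raise ValueError(f"RTO prompt is missing its {name}")
        return f"Role: {self.role}\nTask: {self.task}\nOutput: {self.output}"
```

A vague instruction like "Find good leads and write a message" has no natural place in this structure, which is the point: the author has to decide on a role, a task, and an output before anything runs.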

Workflow 1: Automate personalized LinkedIn icebreakers with Claude

When to use: For high-value prospects where tone and context matter most.

What it solves: Eliminates low reply rates from sending generic, uninspiring opening messages and copy-paste intros that fail to start real conversations.

How it works: Claude (via MCP) reads the prospect's LinkedIn profile and related data to draft a highly personalized, context-aware opener. The draft then appears in a HeyReach list/campaign for review. The sales rep edits, approves, and then sends the message.

Failure zone: Tone-deaf personalization that misses context or sounds too robotic.

Safeguard: Rep review before sending in the campaign builder to ensure every message is vetted by a human before going live.

Implementation steps: Connect Claude → upload list → run “Analyze Profile + Draft Opener.”

Final prompt: You are a B2B SDR focused on securing demos, using a professional tone that references a pain point. Read the prospect's LinkedIn data ({current_role}, {tenure}, {recent_posts}) and draft one hyper-personalized connection request message. The message must be plain text, no more than 150 characters, and reference one specific piece of profile data. Once drafted, use HeyReach MCP to “Add Lead to Campaign” and save the message to the custom field “Icebreaker_Draft”.
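Before a draft reaches the rep, a quick automated check can catch the mechanical failures so the human review can focus on tone. The sketch below is illustrative only: the `icebreaker_problems` function is hypothetical, and the checks simply mirror the constraints stated in the prompt (150 characters, plain text with no leftover placeholders, one reference to profile data).

```python
import re

# Matches template variables such as {current_role} left unfilled in a draft.
PLACEHOLDER = re.compile(r"\{[A-Za-z_]+\}")


def icebreaker_problems(draft, profile_values, max_chars=150):
    """Return a list of problems found in a drafted connection message."""
    problems = []
    if len(draft) > max_chars:
        problems.append(f"too long: {len(draft)} > {max_chars} characters")
    if PLACEHOLDER.search(draft):
        problems.append("unfilled template placeholder")
    if not any(v and v.lower() in draft.lower() for v in profile_values):
        problems.append("no reference to profile data")
    return problems
```

An empty list means the draft passes the mechanical checks. It still goes to the rep for approval, because none of these checks can judge whether the message is tone-deaf.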

Workflow 2: A/B message testing with AI-generated variants

When to use: For teams running A/B tests or campaigns with tone experiments.

What it solves: Getting campaign messaging right is a process of trial and error, which is slow when done manually. This speeds up A/B testing while keeping message tone consistent across experiments.

How it works: Claude drafts three distinct message variants (e.g., formal, casual, benefit-driven). These are saved to custom fields in HeyReach. The sales rep selects or edits the best version to use in the campaign. The chosen variant is approved, and HeyReach's seat rotation deploys it safely across multiple accounts.

Failure zone: Over-personalization that feels creepy or under-personalization that feels automated.

Safeguard: The rep approves one variant manually before the campaign is deployed.

Implementation steps: Connect Claude → upload lead list → prompt "Generate 3 LinkedIn DM variants for [campaign ID]" → review variants in custom fields → select best version → add to campaign → deploy via seat rotation.

Final prompt: You are a senior copywriter focused on conversion rates. Generate 3 distinct LinkedIn DM variants to secure a 15-minute demo, ensuring they all maintain the core value proposition. Return the 3 variants in a CSV format with the following columns: Message_ID, Variant_Text, Tone_Used. The required tones are: Variant 1 (Formal tone), Variant 2 (Casual tone), and Variant 3 (Benefit-driven tone).
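Because the prompt asks for CSV with fixed columns, the output can be validated before it is saved to custom fields. The following is a minimal sketch using Python's standard `csv` module; the `parse_variants` and `normalize_tone` helpers are hypothetical, and the tone labels are assumed to come back as either "Formal" or "Formal tone" style strings.

```python
import csv
import io

EXPECTED_COLUMNS = ["Message_ID", "Variant_Text", "Tone_Used"]
REQUIRED_TONES = {"formal", "casual", "benefit-driven"}


def normalize_tone(raw):
    """Lowercase a tone label and drop a trailing ' tone' if present."""
    tone = raw.strip().lower()
    return tone[:-len(" tone")] if tone.endswith(" tone") else tone


def parse_variants(csv_text):
    """Parse the model's CSV and check it has one variant per required tone."""
    reader = csv.DictReader(io.StringIO(csv_text.strip()))
    if reader.fieldnames != EXPECTED_COLUMNS:
        raise ValueError(f"unexpected columns: {reader.fieldnames}")
    rows = list(reader)
    tones = {normalize_tone(row["Tone_Used"] or "") for row in rows}
    if len(rows) != 3 or tones != REQUIRED_TONES:
        raise ValueError("expected exactly one variant per required tone")
    return rows
```

If the model returns a malformed table, the error surfaces here instead of as a broken custom field inside a live campaign.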

Workflow 3: Smart inbox triage with sentiment tagging

When to use: High-volume inboxes with multiple campaigns and senders needing reply prioritization.

What it solves: High reply volume makes it hard to prioritize and follow up with the most promising leads quickly. Missed hot leads stay buried in reply volume; time is wasted reading "not interested" replies. This helps you cut through inbox chaos by surfacing the most promising replies first.

How it works: Claude (via MCP) analyzes all prospect replies in HeyReach’s Unibox for a specific sender. It reads the latest exchange, summarizes it in 3 sentences, and detects sentiment. It then auto-applies tags in HeyReach: "Interested", "Needs Nurture", or "Not Interested" (creating the tags if they don't exist). These tags appear in the Unibox for a human to review and prioritize.

Failure zone: AI tags a high-intent reply (e.g., "Can you send pricing?") as "Not Interested" instead of "Interested."

Safeguard: A human reviews all tagged replies in the Unibox before taking any action (responding or syncing to CRM).

Implementation steps: Connect Claude → prompt "Analyze all conversations for [sender name], detect sentiment, apply tags: Interested/Needs Nurture/Not Interested" → review tagged replies in Unibox → prioritize and respond.

Final prompt: You are an expert sales inbox analyst dedicated to speed and lead qualification. For all new replies in the HeyReach Unibox for the sender {SENDER_NAME}, detect the sentiment of the entire conversation thread. Based on the sentiment, use HeyReach MCP to apply one of the following tags: 'Interested,' 'Needs Nurture,' or 'Not Interested.'
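Once replies are tagged, the human review order matters as much as the tags. The sketch below shows one possible ordering, assuming each reply is a dictionary with a hypothetical `tag` field: anything carrying an unexpected tag is surfaced first so a human looks at it before it gets lost.

```python
TAG_PRIORITY = {"Interested": 0, "Needs Nurture": 1, "Not Interested": 2}


def review_queue(tagged_replies):
    """Order AI-tagged replies for human review; unknown tags come first."""
    # Unknown or misspelled tags get priority -1 so they are never buried.
    return sorted(tagged_replies, key=lambda reply: TAG_PRIORITY.get(reply["tag"], -1))
```

Because Python's sort is stable, replies with the same tag keep their original order, for example newest first if that is how they were fetched.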

Workflow 4: Automated AI-powered lead filtering

When to use: When working with large, unfiltered lead lists.

What it solves: Manual list cleanup to ensure outreach only targets senior-level decision-makers is time-consuming. This eliminates wasted outreach to low-level contacts by automatically isolating decision-makers.

How it works: Claude filters the raw lead list based on seniority and title complexity (e.g., C-level, VP, Director). It creates a new, tagged list ready for campaign deployment. The sales rep removes any mis-tags before giving final approval for the list launch.

Failure zone: Misreading creative or ambiguous titles like “Growth Wizard” or “Head of Awesomeness.”

Safeguard: A manual scan of the excluded and included lists before the campaign launch.

Implementation steps: Connect Claude → upload list → prompt "Filter leads by C-level titles, exclude ICs/contractors, create new list, tag key decision-maker" → review exclusions → validate tagged list → launch campaign.

Final prompt: You are a RevOps Specialist dedicated to list hygiene. Filter the imported list by seniority: exclude all Individual Contributors (ICs), consultants, and contractors. Include only C-level, VP-level, and Director-level titles. Use HeyReach MCP to create an Empty List, title it Filtered_Leads_[Date], and then add leads to the list for all qualified titles. Finally, set a new custom field called DecisionMaker_Tagset to 'True' for all leads in the new list.
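A simple rule-based pre-filter shows why the manual scan is still needed. The sketch below is an assumption for illustration, not how Claude classifies titles: the keyword patterns are hypothetical, and any title matching neither pattern is sent to review instead of being silently excluded.

```python
import re

# Hypothetical keyword patterns; extend them for your own ICP.
INCLUDE = re.compile(r"\b(chief|c[a-z]o|vp|vice president|director)\b", re.IGNORECASE)
EXCLUDE = re.compile(r"\b(consultant|contractor|freelance|intern)\b", re.IGNORECASE)


def classify_title(title: str) -> str:
    """Return 'include', 'exclude', or 'review' for a job title."""
    if EXCLUDE.search(title):
        return "exclude"
    if INCLUDE.search(title):
        return "include"
    # Creative or ambiguous titles go to a human instead of being guessed.
    return "review"
```

Titles like "Growth Wizard" or "Head of Awesomeness" land in `review`, which is exactly the pile the rep should scan before approving the list.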

Workflow 5: Automate closed-lost reactivation

When to use: To re-engage deals older than 6 months with updated messaging and context.

What it solves: Re-engaging "Closed-Lost" deals is a high-ROI but manually demanding task, hence it is often neglected. This helps you stop revenue leakage by reviving old opportunities with updated context.

How it works: Claude (via MCP) pulls "Closed-Lost" deals from the CRM that are older than 6 months. It enriches the data with current job titles and company info. It then suggests a re-entry campaign with a fresh messaging angle. A human reviews the list for duplicates and data accuracy, then approves the final list. HeyReach launches the campaign with the tag "CRM-Lost-Reactivation".

Failure zone: Duplicates in the list; the prospect changed companies, but data wasn't updated; reaching out too soon after they initially closed.

Safeguard: Tag-based duplicate filter in HeyReach; human de-duplication and data verification before campaign launch.

Implementation steps: Connect Claude → prompt "Pull closed-lost deals >6 months from CRM, enrich data, create HeyReach list with tag CRM-Lost-Reactivation" → review for duplicates and data accuracy → approve final list → launch campaign.

Final prompt: You are a CRM data enrichment and reactivation specialist. Pull all 'Closed-Lost' deals from the CRM older than 6 months. Enrich the data with current job titles and information. Next, draft a 4-step re-engagement sequence outline focused on the new product feature: {NEW_PRODUCT_FEATURE}. Return a HeyReach-formatted list file tagged CRM-Lost-Reactivation, along with the 4-step message sequence outline (plain text). Ensure all original CRM properties are included in the list file.
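The age and duplicate rules can also be checked in code before the list reaches a human. This is a minimal sketch under assumptions: deals are plain dictionaries with hypothetical `stage`, `closed_on`, and `email` fields, and "older than 6 months" is approximated as 183 days.

```python
from datetime import date, timedelta


def reactivation_candidates(deals, today, min_age_days=183):
    """Keep Closed-Lost deals older than the cutoff, one per contact."""
    cutoff = today - timedelta(days=min_age_days)
    seen, candidates = set(), []
    for deal in deals:
        if deal["stage"] != "Closed-Lost" or deal["closed_on"] > cutoff:
            continue  # wrong stage, or closed too recently to re-engage
        key = deal["email"].strip().lower()
        if key in seen:
            continue  # same contact already on the list
        seen.add(key)
        candidates.append(deal)
    return candidates
```

This covers two of the listed failure modes, duplicates and reaching out too soon. It cannot tell whether the prospect has changed companies, which is why the human data check stays in the workflow.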

Workflow 6: AI-assisted reply handling with approval

When to use: High reply volume where reps need help drafting responses without losing control.

What it solves: A high volume of replies makes it hard to keep responses fast and consistent, which leads to slower response times, inconsistent messaging, and rep burnout from repetitive replies. This workflow helps you reduce response time and rep fatigue while maintaining message quality.

How it works: When a prospect reply arrives in the HeyReach Unibox (e.g., "Would it be possible with a meeting at some point?"), Claude (via MCP) reads the original campaign message and the latest reply. It summarizes the prospect's reply and drafts a short, conversational response aligned with the campaign goal. It then presents the draft to the human with an explicit approval request. Only after explicit human approval does Claude send the response back into the Unibox conversation.

Failure zone: AI suggests a tone-deaf response (too aggressive/salesy) or misreads prospect intent.

Safeguard: Mandatory human approval before sending, so nothing is sent automatically without review first. The human can edit or reject the draft.

Implementation steps: Connect Claude → prompt "Read original campaign + latest reply for [sender name], draft conversational response, request approval before sending" → review drafted response → approve/edit → send via Unibox.

Final prompt: You are a conversational SDR. Read the original campaign message and the prospect's latest reply. Draft one conversational, human-like response to move the conversation toward a meeting. The response must be a single plain text message of max 4 sentences. The final line must be: 'Do you approve sending this response, or would you like me to edit it?'
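The approval rule is easiest to trust when it is enforced by structure, not just by the prompt. The sketch below illustrates the idea with a hypothetical `Draft` record and `send_reply` function; the `send` callable stands in for whatever actually delivers the message and is not a real HeyReach call.

```python
from dataclasses import dataclass
from typing import Callable, Optional


class ApprovalRequired(Exception):
    """Raised when a draft is sent without a named human approver."""


@dataclass
class Draft:
    text: str
    approved_by: Optional[str] = None  # set only after a human reviews it


def send_reply(draft: Draft, send: Callable[[str], None]) -> None:
    """Send a draft only if a human has explicitly approved it."""
    if not draft.approved_by:
        raise ApprovalRequired("draft has no human approver")
    send(draft.text)
```

With a gate like this, a draft that skipped review fails loudly instead of quietly going out to the prospect.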

Your 5 checks before adding AI to outbound

AI automation amplifies existing processes. If your data is messy or your rules are vague, AI will simply automate the mess. Before you deploy any AI-assisted workflow, you need to verify that your underlying sales operations and processes are clean, clear, and safe.

Use this five-point checklist to confirm your team is ready to deploy safe, predictable AI outreach with Claude and HeyReach MCP. If any check fails, fix the underlying issue before adopting the workflow.

Your AI readiness checklist to scale outbound

- Data hygiene: Are your CRM fields up to date? AI is only as good as the data you feed it. Make sure key fields like Job Title, Company Size, and Last Contact Date are accurate. If the data is messy, the AI's output (personalization, segmentation) will be messy.

- Stage definitions: Does AI have clear rules to follow? Spell them out. For example: "If sentiment is 'Interested,' sync to CRM stage 'Lead Qualified.'" Vague definitions mean the AI won't know which action to suggest.

- Prompt boundaries: Can AI suggest, but not send? Verify that your prompt structure (like the RTO framework) only allows the AI to draft, tag, or indicate an action. The final execution, especially sending a message, must be reserved for human approval.

- Fallback paths: Do uncertain tags route to human review? Define an immediate "Fallback" queue for any scenario the AI is unsure about. When AI isn’t 90% confident, it should default to escalation, not execution. If a reply is neither clearly "Interested" nor "Not Interested," the AI should apply the tag ‘Review_Human’ and route it directly to an SDR.

- Final send ownership: Is human approval required? For all outreach, ensure the human-in-the-loop is the final approval layer. This protects your brand, minimizes mistakes, and keeps your deliverability and account safety intact. Do not allow LLMs to send messages unsupervised.
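The fallback rule from the checklist can be written down as a single routing function. Treat this as a sketch under assumptions: it presumes your workflow produces a numeric confidence score for each tag, which is not something every LLM setup provides, and the `route_reply` name and the tag set are hypothetical.

```python
ALLOWED_TAGS = {"Interested", "Needs Nurture", "Not Interested"}


def route_reply(tag: str, confidence: float, threshold: float = 0.9) -> str:
    """Return the tag to apply, escalating to a human when unsure."""
    if tag not in ALLOWED_TAGS or confidence < threshold:
        # Unknown tag or low confidence: escalate, never execute.
        return "Review_Human"
    return tag
```

Note that the function only ever returns a tag. Sending remains a separate, human-approved step.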

If any of these five checks fail, pause your AI integration until the underlying process or data is fixed. Starting with a solid foundation is the only way to ensure that using AI for your outbound becomes a multiplier, not a liability.

Next steps: your 5-day AI rollout plan

Move from theory to implementation with this simple, five-day plan to safely deploy your first AI-assisted outbound workflow using Claude (via MCP) and HeyReach. The key is to start small, validate your results, and then scale.

Day 1: Pick your first workflow

- Action: Identify your biggest pain point. Don't try to automate everything at once. Choose a single workflow that solves an immediate problem.

- Focus/outcome: Example: If your team is struggling with reply overload, choose workflow 3 (smart inbox triage). If reps have message fatigue, choose workflow 2 (A/B message testing). Bad list quality? Start with workflow 4 (AI-powered lead filtering).

Day 2: Connect MCP & run readiness audit

- Action: Connect your LLM (Claude/ChatGPT) via the Model Context Protocol (MCP) to your outreach stack (HeyReach/CRM).

- Focus/outcome: Then, run the 5 checks before deploying AI (Data hygiene, prompt boundaries, fallback paths, etc.) to ensure your systems are ready for automation. Do not proceed until all checks pass.

Day 3: Test small sample (10 leads)

- Action: Validate the end-to-end process. Apply your chosen workflow to a small, controlled sample of 10 leads. Do not launch a full campaign. Focus on watching the AI execute the task (e.g., draft the message, tag the reply) and observe the output without sending anything.

- Focus/outcome: Confirm that the AI can read the data, execute the task (drafting/tagging), and stage the action for human approval exactly as expected.

Day 4: Refine prompts using RTO

- Action: Optimize AI performance. Take the outputs from Day 3 and refine your prompts using the Role → Task → Output (RTO) framework. Manually review and approve the drafts generated by the AI for the 10 leads, focusing on brand tone consistency and alignment.

- Focus/outcome: All AI-generated drafts meet your tone guidelines. Any suggested tags or actions are 100% accurate before approval.

Day 5: Measure lift & expand safely

- Action: Launch the workflow with the refined prompt on a slightly larger test group (50-100 leads). Track key metrics like your reply rates, speed of response, or time saved on list cleanup. Once you see a confirmed lift, you can safely scale the workflow across your team.

- Focus/outcome: Look for higher reply rates on the AI-drafted messages or faster response times due to efficient AI triage. Start small, validate, then scale.
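Measuring lift comes down to comparing a rate before and after. A minimal sketch of that arithmetic is below; the function names are hypothetical, and with a test group of only 50 to 100 leads the result is a directional signal, not statistical proof.

```python
def reply_rate(replies: int, sent: int) -> float:
    """Share of sent messages that received a reply."""
    return replies / sent if sent else 0.0


def relative_lift(baseline_rate: float, test_rate: float) -> float:
    """Relative change of the test rate over the baseline rate."""
    if baseline_rate <= 0:
        raise ValueError("baseline rate must be positive")
    return (test_rate - baseline_rate) / baseline_rate
```

For instance, going from 8 replies per 100 messages to 12 per 100 is a relative lift of about 50%, even though the absolute difference is only four percentage points.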

Start testing today

The key to successful, scalable automation lies in discipline: use the RTO framework for clear prompts and rely on the 5-point checklist to ensure your foundational data and processes are ready.

If you combine the power of Claude's intelligence, connected via the Model Context Protocol, with the safe orchestration of HeyReach, you can implement the six workflows I've shared with you starting today.

Ready to see the efficiency gains yourself?

Frequently Asked Questions

What should I automate versus keep human in outbound sales?

You should automate high-volume, repetitive, or data-intensive tasks where the output can be easily reviewed, such as lead filtering, list enrichment, and CRM updates. Keep human involvement for tasks requiring judgment, nuance, or final approvals, like handling highly complex or sensitive prospect questions or refining AI-generated drafts.

Is AI-assisted LinkedIn outreach safe and compliant?

Yes, when the right safeguards are in place. The key is human ownership and controlled automation. As long as you stay within LinkedIn’s spam and data protection rules and use AI as an assistant to support and enhance your outbound efforts, your outreach should remain safe and compliant.

Do I need a developer to implement these workflows?

No, not typically. These workflows are designed to be implemented by a sales operations or SDR management professional using no-code/low-code connections. The bulk of the "work" is usually in writing prompts that can generate good outputs, which is a skill, not a coding requirement.

How does the Model Context Protocol (MCP) differ from using a raw AI chatbot like ChatGPT alone?

The difference lies in integration and control. A raw AI chatbot like ChatGPT or Claude works well for one-off prompts and ideation, but it has no context or connection to your sales workflow. The Model Context Protocol (MCP) fixes this by acting as the orchestration layer that allows the AI to read real-time data from your CRM or data source and give you better results when executing.

How do I ensure my brand’s unique tone and voice aren't lost when using AI for message drafting?

Tone consistency can be maintained through two primary safety checkpoints: 1. You bake your brand voice directly into the prompt. This constrains the AI's language from the start. 2. You pass every AI-generated draft through a human review queue.